Homepage

Contact

Soshi Shimada

Max-Planck-Institut für Informatik, D6: Visual Computing and Artificial Intelligence

office: Campus E1 4, Saarland Informatics Campus, 66123 Saarbrücken, Germany

email: sshimada [at] mpi-inf dot mpg dot de

Experiences

- Jun 2022 - Dec 2022:

Research intern at Google Zurich, Switzerland

- Oct 2019 - Present:

Ph. D. candidate in the Visual Computing and Artificial Intelligence department at Max-Planck-Institut für Informatik and Universität des Saarlandes, Saarbrücken, Germany

- Oct 2017 - Sep 2019:

Research assistant at the German Research Center for Artificial Intelligence (DFKI), Germany

Research Summary

- Topic: Physically plausible monocular 3D human motion capture and synthesis with interactions.

- Approach: My work reconstructs 3D human motion from an RGB sequence by explicitly integrating 1) physics equations and/or 2) models of interactions with the environment into the motion capture pipeline for improved realism. My recent work extends beyond capturing human motion alone and also captures surface deformations resulting from self-interactions (e.g., punching a face) from an RGB video. One of my projects also addresses diffusion-model-based synthesis of hand-object interactions.

- Publications: CVPR, ECCV, ICCV, SIGGRAPH, SIGGRAPH Asia, TPAMI, etc.

Research Interests

- Physics-based 3D human motion capture

- Motion capture with interactions

- Rigid body dynamics

- Non-rigid surface deformation capture

- Machine learning

Publications

2024

MACS: Mass Conditioned 3D Hand and Object Motion Synthesis

S. Shimada, F. Mueller, J. Bednařík, B. Doosti, B. Bickel, D. Tang, V. Golyanik, J. Taylor, C. Theobalt and T. Beeler.

Accepted at International Conference on 3D Vision (3DV), 2024 [project page] [paper]

Decaf: Monocular Deformation Capture for Face and Hand Interactions

S. Shimada, V. Golyanik, P. Pérez and C. Theobalt.

ACM Transactions on Graphics (SIGGRAPH Asia), 2023. [project page] [paper] [dataset]

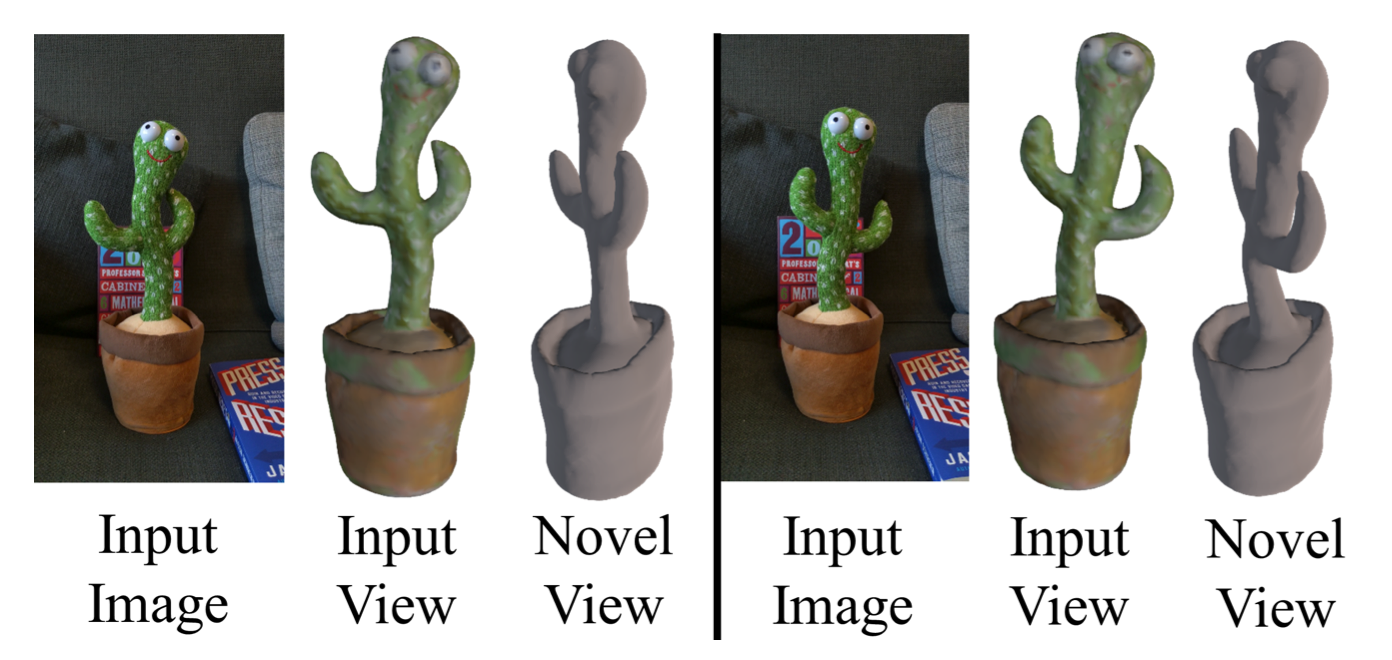

Unbiased 4D: Monocular 4D Reconstruction with a Neural Deformation Model

E. Johnson, M. Habermann, S. Shimada, V. Golyanik and C. Theobalt

Accepted at Computer Vision and Pattern Recognition Workshop (CVPRW), 2023. [project page] [paper]

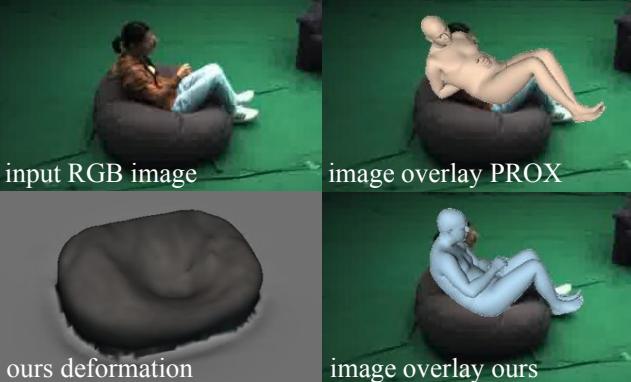

MoCapDeform: Monocular 3D Human Motion Capture in Deformable Scenes

Z. Li, S. Shimada, B. Schiele, C. Theobalt and V. Golyanik

Accepted at 3D Vision (3DV), 2022. (Best Student Paper Award) [project page] [paper]

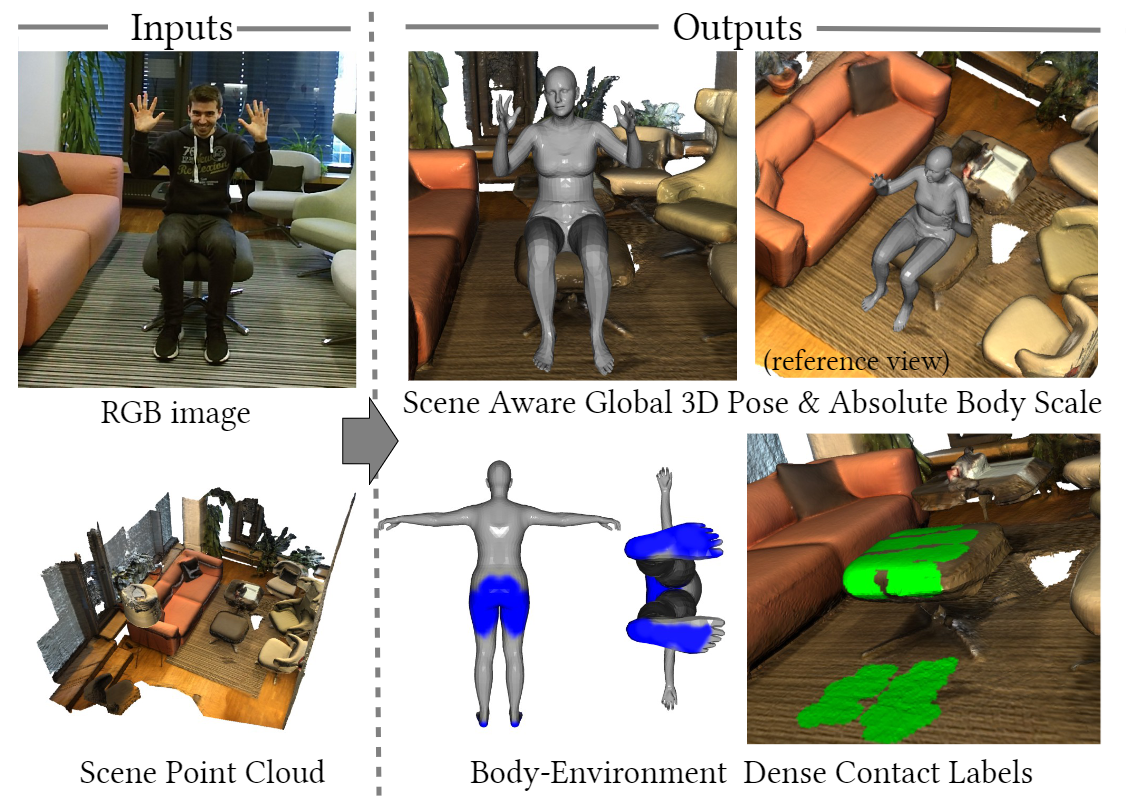

HULC: 3D Human Motion Capture with Pose Manifold Sampling and Dense Contact Guidance

S. Shimada, V. Golyanik, Z. Li, P. Pérez, W. Xu and C. Theobalt

Accepted at European Conference on Computer Vision (ECCV), 2022. [project page] [paper]

UnrealEgo: A New Dataset for Robust Egocentric 3D Human Motion Capture

H. Akada, J. Wang, S. Shimada, M. Takahashi, C. Theobalt and V. Golyanik

Accepted at European Conference on Computer Vision (ECCV), 2022. [project page]

Physical Inertial Poser (PIP): Physics-aware Real-time Human Motion Tracking from Sparse Inertial Sensors

X. Yi, Y. Zhou, M. Habermann, S. Shimada, V. Golyanik, C. Theobalt and F. Xu

Accepted at Computer Vision and Pattern Recognition (CVPR), 2022. (Best Paper Finalist) [paper] [project page]

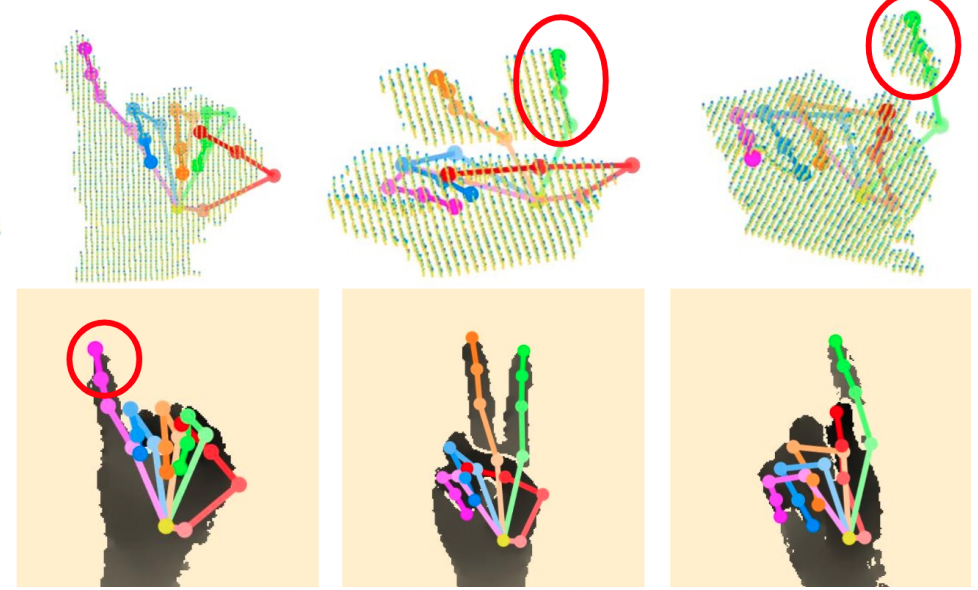

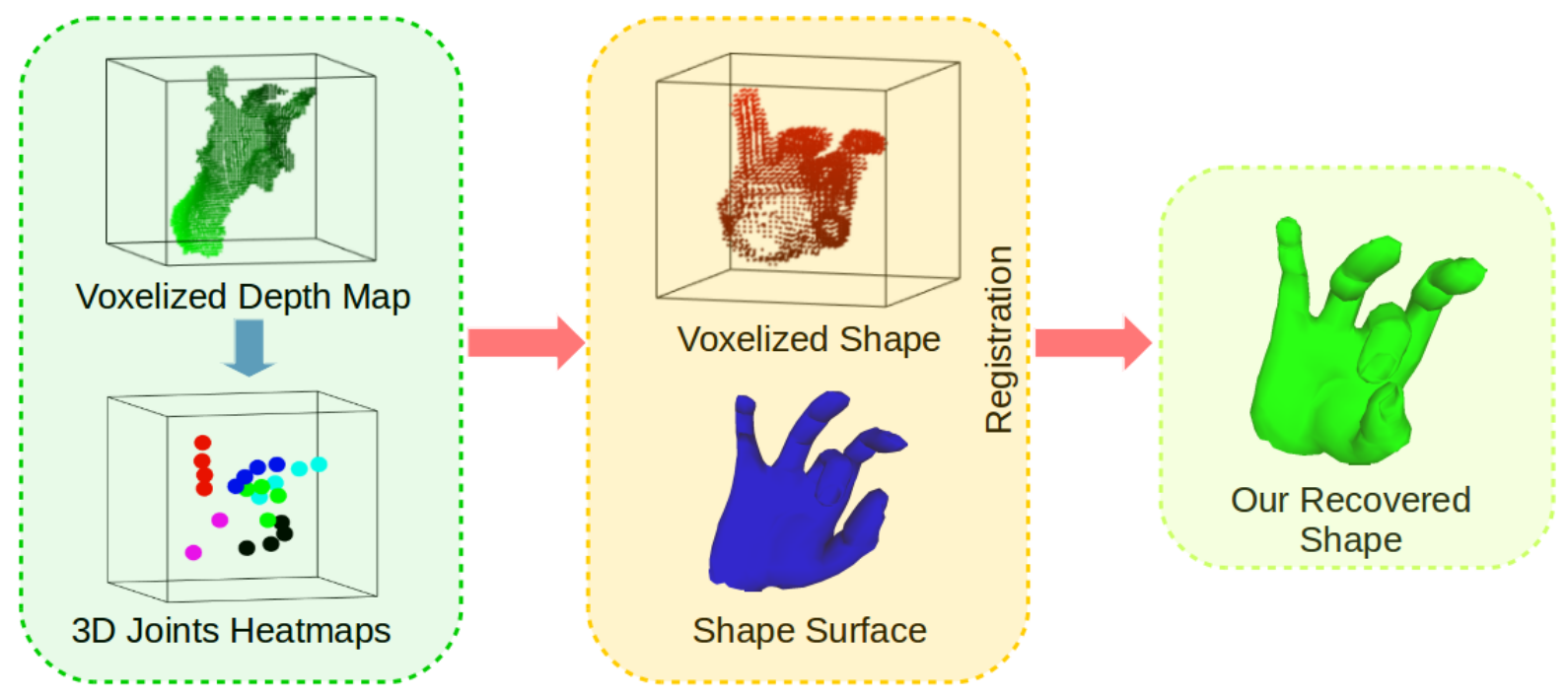

HandVoxNet++: 3D Hand Shape and Pose Estimation using Voxel-Based Neural Networks

J. Malik, S. Shimada, A. Elhayek, S. Ali, C. Theobalt, V. Golyanik and D. Stricker

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021. [paper] [project page]

Gravity-Aware 3D Human-Object Reconstruction

R. Dabral, S. Shimada, A. Jain, C. Theobalt and V. Golyanik

In International Conference on Computer Vision (ICCV), 2021. [paper] [project page] [code] [dataset]

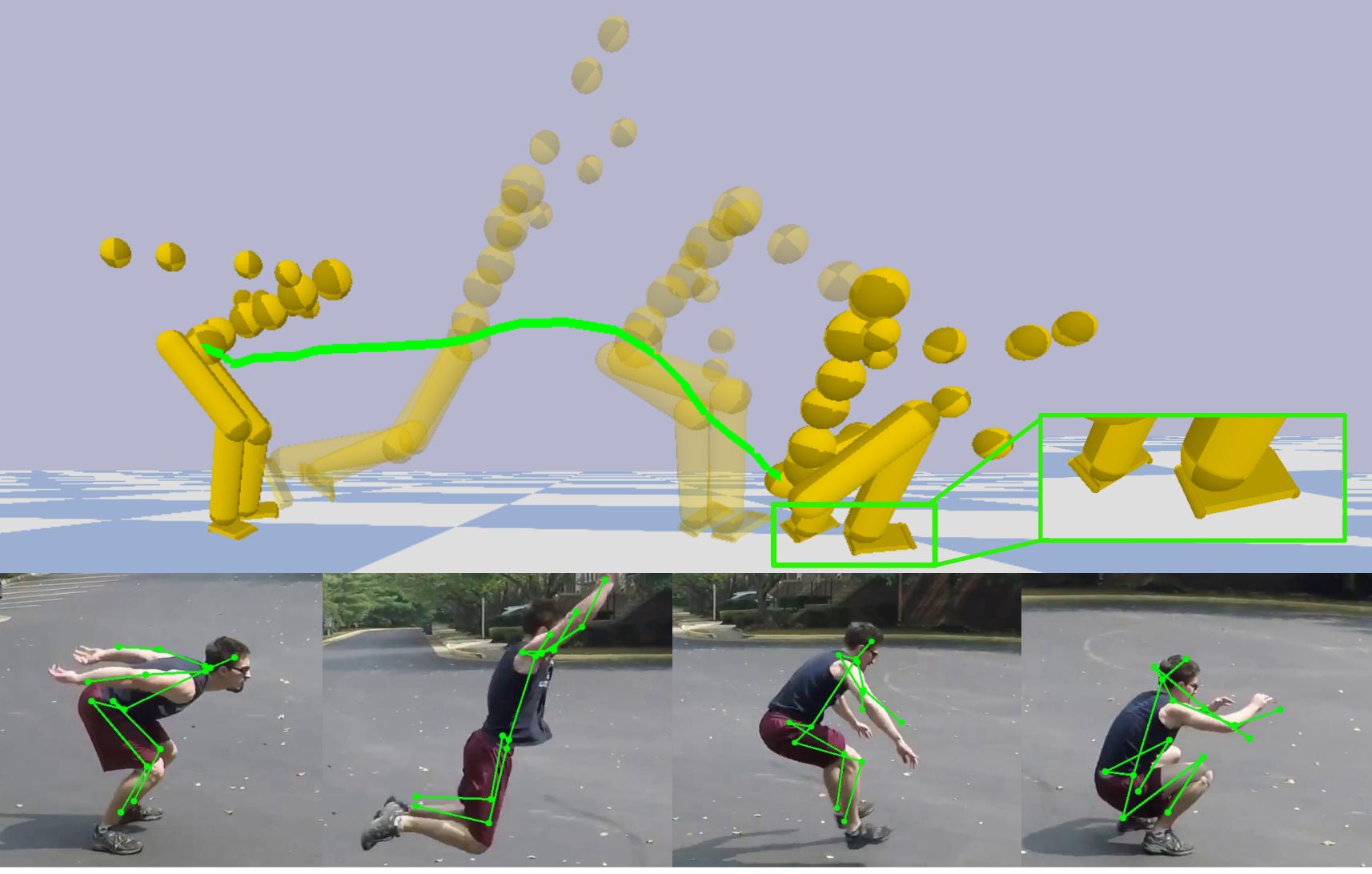

Neural Monocular 3D Human Motion Capture with Physical Awareness

S. Shimada, V. Golyanik, W. Xu, P. Pérez and C. Theobalt.

ACM Transactions on Graphics (SIGGRAPH), 2021. [paper] [project page]

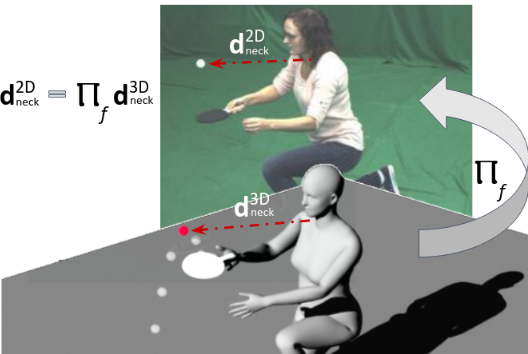

PhysCap: Physically Plausible Monocular 3D Motion Capture in Real Time

S. Shimada, V. Golyanik, W. Xu and C. Theobalt.

ACM Transactions on Graphics (SIGGRAPH Asia), 2020. [paper] [project page] [arXiv]

Fast Simultaneous Gravitational Alignment of Multiple Point Sets

V. Golyanik, S. Shimada and C. Theobalt.

In International Conference on 3D Vision (3DV), 2020 (Oral) [paper] [project page]

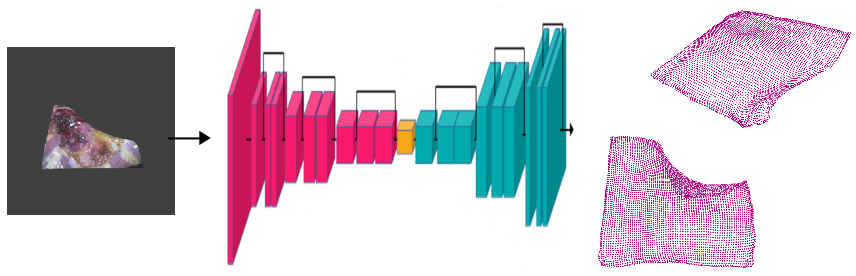

HandVoxNet: Deep Voxel-Based Network for 3D Hand Shape and Pose Estimation from a Single Depth Map

J. Malik, I. Abdelaziz, A. Elhayek, S. Shimada, S. A. Ali, V. Golyanik, C. Theobalt and D. Stricker.

Accepted in Computer Vision and Pattern Recognition (CVPR), 2020. [paper] [supplement] [project page] [arXiv]

2019

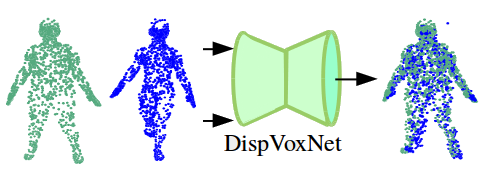

DispVoxNets: Non-Rigid Point Set Alignment with Supervised Learning Proxies.

S. Shimada, V. Golyanik, E. Tretschk, D. Stricker and C. Theobalt.

In International Conference on 3D Vision (3DV), 2019; Oral [paper] [poster] [project page] [arXiv]

IsMo-GAN: Adversarial Learning for Monocular Non-Rigid 3D Reconstruction.

S. Shimada, V. Golyanik, C. Theobalt and D. Stricker.

In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019; Oral [paper] [code] [arXiv]

HDM-Net: Monocular Non-Rigid 3D Reconstruction with Learned Deformation Model.

V. Golyanik, S. Shimada, K. Varanasi and D. Stricker.

In International Conference on Virtual Reality and Augmented Reality (EuroVR) 2018; Oral (Long Paper) [paper] [HDM-Net data set]

Education

- October 2019 - present:

Ph. D. candidate in Computer Science at the Universität des Saarlandes, Saarbrücken, Germany and the Max-Planck-Institut für Informatik

- 2017 - 2019:

Master Studies in Computer Science at the University of Kaiserslautern

Specialization: Intelligent System

- 2011 - 2015:

Bachelor Studies in Computer Science and Engineering at Waseda University