Overview

In traditional video, the viewpoint in a scene is the one chosen by the director. It cannot be changed by the viewer while watching the video.

The goal of free-viewpoint video, on the other hand, is to create a feeling of immersion by giving viewers the opportunity to change their viewpoint in the scene interactively.

The human body and its motion play a central role in most visual media, and the body's structure can be exploited for robust motion estimation and efficient visualization. In our work, we develop a system that uses synchronized multi-view video footage of an actor's performance to estimate motion parameters and to interactively re-render the actor's appearance from any viewpoint.

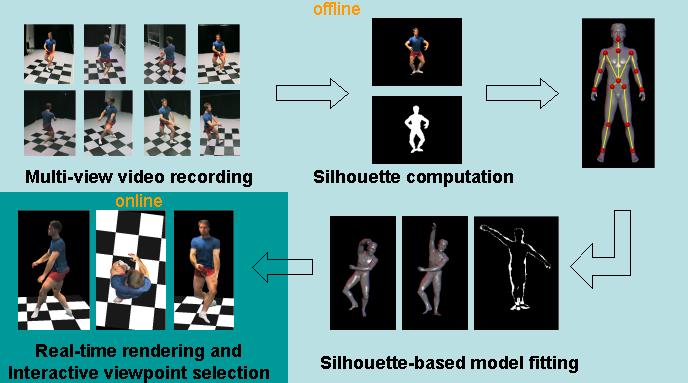

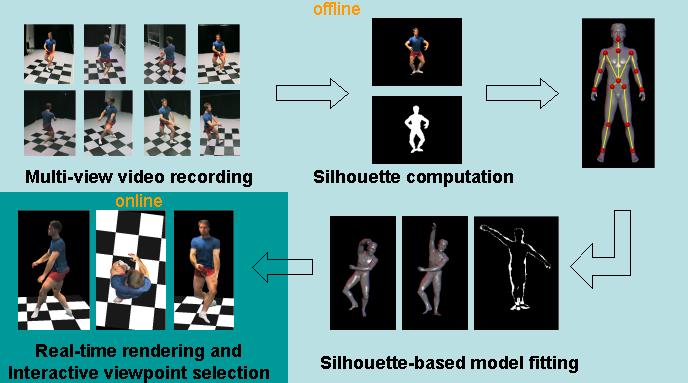

The system consists of an off-line and an on-line component (see Fig. 1). In a first step, a multi-view video sequence of a person is recorded in our acquisition room. The shape of an a priori body model is then adapted to match the body shape of the recorded person. The silhouettes of the person in each camera view are used to estimate the parameters of human motion by means of a model-based, non-intrusive human motion capture algorithm. The motion capture system runs off-line and robustly handles a wide range of human motion.
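As an illustration of silhouette-based pose estimation, the sketch below scores a candidate pose by rendering a model silhouette in every camera view and counting the pixels where it disagrees with the recorded silhouette (a pixel-wise XOR). It then improves the pose with a greedy coordinate descent. This is a minimal sketch under stated assumptions: the XOR error, the search strategy, and the names `xor_error`, `multi_view_error`, `fit_pose` and `render_square` are hypothetical. `render_square` is a toy stand-in for rasterizing an articulated body model from calibrated cameras, and a real motion capture algorithm would optimize many joint parameters with a more capable search.

```python
from typing import Callable, List, Sequence, Tuple

# A binary silhouette image: mask[y][x] is True for foreground pixels.
Mask = List[List[bool]]
# Hypothetical renderer: (pose parameters, camera index) -> model silhouette.
Renderer = Callable[[List[float], int], Mask]


def xor_error(model: Mask, image: Mask) -> int:
    """Number of pixels where the model and image silhouettes disagree."""
    return sum(
        m != s
        for model_row, image_row in zip(model, image)
        for m, s in zip(model_row, image_row)
    )


def multi_view_error(params: List[float], render: Renderer,
                     image_masks: Sequence[Mask]) -> int:
    """XOR error summed over all camera views."""
    return sum(xor_error(render(params, view), mask)
               for view, mask in enumerate(image_masks))


def fit_pose(params: Sequence[float], render: Renderer,
             image_masks: Sequence[Mask], step: float = 1.0,
             max_iters: int = 100) -> Tuple[List[float], int]:
    """Greedy coordinate descent: nudge one parameter at a time by +/- step
    and keep the change whenever the multi-view error drops."""
    best_params = list(params)
    best_err = multi_view_error(best_params, render, image_masks)
    for _ in range(max_iters):
        improved = False
        for i in range(len(best_params)):
            for delta in (step, -step):
                trial = best_params.copy()
                trial[i] += delta
                err = multi_view_error(trial, render, image_masks)
                if err < best_err:
                    best_params, best_err, improved = trial, err, True
                    break  # accept and move on to the next parameter
        if not improved:
            break  # local minimum for this step size
    return best_params, best_err


def render_square(params: List[float], view: int,
                  size: int = 16, side: int = 6) -> Mask:
    """Toy stand-in for a body model: a square whose position is the pose.
    Camera 1 sees the scene with x and y swapped."""
    tx, ty = params
    x0, y0 = (tx, ty) if view == 0 else (ty, tx)
    return [[x0 <= x < x0 + side and y0 <= y < y0 + side
             for x in range(size)] for y in range(size)]


# Usage: recover the pose (5, 7) from two synthetic silhouettes.
targets = [render_square([5, 7], view) for view in range(2)]
pose, err = fit_pose([3.0, 4.0], render_square, targets)
print(pose, err)  # [5.0, 7.0] 0
```

Because the error is a pixel count, it stays flat once the model and image silhouettes stop overlapping, so a local search like this one needs a reasonably close starting pose.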

For realistic surface appearance, time-varying multi-view textures are created from the video frames at each time step. In this way, time-dependent changes in the body surface are reproduced in high detail.

The rendering system runs at real-time frame rates on ubiquitous graphics hardware, yielding a highly naturalistic impression of the actor.
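One common way to combine several camera images into a view-dependent appearance is to weight each camera by how closely its viewing direction matches the virtual viewpoint. The following is a minimal sketch assuming that weighting scheme: the cosine of the angle between directions, clamped at zero and raised to a hypothetical sharpness exponent `alpha`, applied to a single texel. It ignores visibility and occlusion, the function names are hypothetical, and it is not necessarily how the multi-view textures described above are blended.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a 3D vector to unit length."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def blend_weights(camera_dirs: Sequence[Vec3], view_dir: Vec3,
                  alpha: float = 1.0) -> List[float]:
    """Weight each camera by max(cos(angle to the virtual view), 0) ** alpha,
    then normalize the weights so they sum to one."""
    v = normalize(view_dir)
    weights = []
    for d in camera_dirs:
        c = normalize(d)
        cos_angle = c[0] * v[0] + c[1] * v[1] + c[2] * v[2]
        weights.append(max(cos_angle, 0.0) ** alpha)
    total = sum(weights)
    if total == 0.0:
        # No camera faces the virtual view: fall back to equal weights.
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]


def blend_texel(colors: Sequence[Color], weights: Sequence[float]) -> Color:
    """Weighted average of one texel's color as seen by each camera."""
    return (
        sum(w * col[0] for w, col in zip(weights, colors)),
        sum(w * col[1] for w, col in zip(weights, colors)),
        sum(w * col[2] for w, col in zip(weights, colors)),
    )


# Usage: a virtual view halfway between two cameras weights them equally.
cams = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
print(blend_weights(cams, (1.0, 1.0, 0.0)))  # [0.5, 0.5]
```

A larger `alpha` makes the result favor the single best-aligned camera, which keeps detail sharper at the cost of more visible transitions as the viewpoint moves.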

The actor can be placed in virtual environments to create composite dynamic scenes. Free-viewpoint video enables camera fly-throughs as well as interactive viewing of the action from arbitrary perspectives.
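A camera fly-through can be described as a sequence of virtual camera poses. As a minimal sketch, assuming a y-up coordinate system and a circular orbit (the function `orbit_path` and its parameters are hypothetical), each frame's camera position lies on a circle around the actor and its viewing direction points at the actor's center. Each pose would then be handed to the free-viewpoint renderer.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def orbit_path(center: Vec3, radius: float, height: float,
               n_frames: int) -> List[Tuple[Vec3, Vec3]]:
    """Return (eye position, unit forward vector) for each frame of a
    circular fly-through around `center` (y axis assumed to point up)."""
    cx, cy, cz = center
    path = []
    for i in range(n_frames):
        theta = 2.0 * math.pi * i / n_frames
        eye = (cx + radius * math.cos(theta),
               cy + height,
               cz + radius * math.sin(theta))
        # Direction from the camera toward the actor's center.
        d = (cx - eye[0], cy - eye[1], cz - eye[2])
        length = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
        forward = (d[0] / length, d[1] / length, d[2] / length)
        path.append((eye, forward))
    return path


# Usage: 120 camera poses circling an actor centered at (0, 1, 0).
path = orbit_path((0.0, 1.0, 0.0), radius=3.0, height=0.5, n_frames=120)
```

A circle is simply the easiest closed path to write down; interpolating between user-chosen keyframe cameras would be a natural alternative for more deliberate fly-throughs.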

Figure 1: Overview of the system components



Copyright © 1998-2002 by Max-Planck-Institut für Informatik. All rights reserved. Impressum and legal notices.

Page maintained by Christian Theobalt <theobalt@mpi-sb.mpg.de>

www site design and concept by Uwe Brahm

Document last changed on Monday, August 25 2003 - 19:00