Homepage

Contact

Diogo Carbonera Luvizon

Max-Planck-Institut für Informatik, Department 6: Visual Computing and Artificial Intelligence

| office: | Campus E1 4, Room 223, Saarland Informatics Campus, 66123 Saarbrücken, Germany |
|---|---|
| email: | Get my email address via email |
| phone: | +49 681 9325 4540 |
| fax: | +49 681 9325 4099 |

News

- Feb. 2024: Three papers accepted to CVPR 2024! More details coming soon.

- Oct. 2023: One paper accepted to 3DV 2024!

- Jul. 2023: Patent granted by the USPTO! [ link ]

- May 2023: SSP-Net accepted to Pattern Recognition!

- Mar. 2023: One paper accepted to CVPR 2023!

- Feb. 2023: One paper accepted to EUROGRAPHICS 2023!

Research Interests

- Computer Vision & Computer Graphics

- Human Pose Estimation and Action Recognition

- 3D Scene Understanding

Publications


Relightable Neural Actor with Intrinsic Decomposition and Pose Control

D. Luvizon, V. Golyanik, A. Kortylewski, M. Habermann, C. Theobalt

ECCV 2024 (to be published).

Description:

In this work, we present Relightable Neural Actor, a new video-based method for learning a pose-driven neural human model that can be relit, supports appearance editing, and models pose-dependent effects such as wrinkles and self-shadows. For training, our method requires only a multi-view recording of the human under known but static lighting. For more details, please check out the paper and project page.

[ arxiv ]

[ project page ]

[ code ]

[ dataset ]


EventEgo3D: 3D Human Motion Capture from Egocentric Event Streams

C. Millerdurai, H. Akada, J. Wang, D. Luvizon, C. Theobalt, V. Golyanik

CVPR 2024.

Description:

In this work, we propose EventEgo3D, the first method for 3D human motion capture from an egocentric monocular event camera with a fisheye lens. Our method handles event streams with high temporal resolution, runs at 140 Hz, and performs 3D human motion capture under high-speed human motions and rapidly changing illumination. We design a prototype of a mobile head-mounted device with an event camera and record a real event-based dataset with ground-truth 3D human poses, in addition to a synthetic dataset.

[ arxiv ]

[ project page ]

[ code ]


Egocentric Whole-Body Motion Capture with FisheyeViT and Diffusion-Based Motion Refinement

J. Wang, Z. Cao, D. Luvizon, L. Liu, K. Sarkar, D. Tang, T. Beeler, C. Theobalt

CVPR 2024.

Description: In this paper, we propose a novel approach for egocentric whole-body motion capture using a single fisheye camera. Our method leverages FisheyeViT and simultaneously estimates human body and hand motion. For hand tracking, we incorporate dedicated hand detection and hand pose estimation networks for regressing 3D hand poses. Finally, we develop a diffusion-based whole-body motion prior model to refine the estimated whole-body motion while accounting for joint uncertainties. We also collect a large synthetic dataset, EgoWholeBody, comprising 840,000 high-quality egocentric images captured across a diverse range of whole-body motion sequences.

[ paper ]

[ project page ]

[ arxiv ]


Holoported Characters: Real-time Free-viewpoint Rendering of Humans from Sparse RGB Cameras

A. Shetty, M. Habermann, G. Sun, D. Luvizon, V. Golyanik, C. Theobalt

CVPR 2024.

Description: This is the first approach to render highly realistic free-viewpoint videos of a human actor in general apparel, from sparse multi-view recording to display, in real-time at an unprecedented 4K resolution.

[ paper ]

[ project page ]

[ arxiv ]


3D Pose Estimation of Two Interacting Hands from a Monocular Event Camera

C. Millerdurai, D. Luvizon, V. Rudnev, A. Jonas, J. Wang, C. Theobalt, V. Golyanik

International Conference on 3D Vision (3DV), 2024

Description: This paper introduces the first framework for 3D tracking of two fast-moving and interacting hands from a single monocular event camera.

[ paper ]

[ project page ]

[ code ]


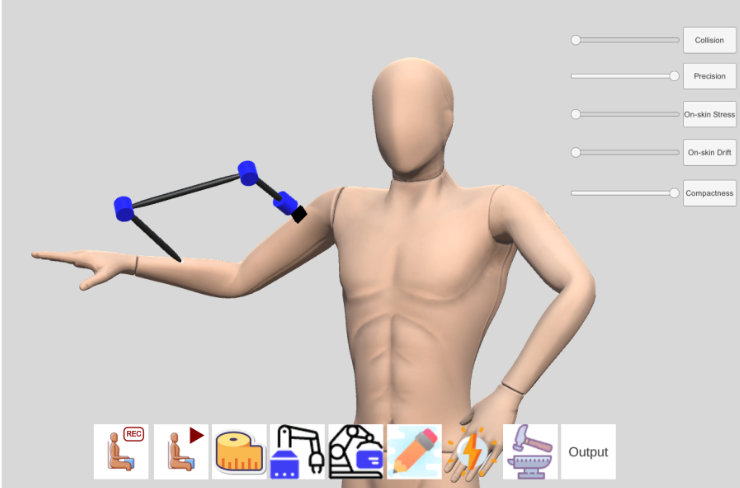

WRLKit: Computational Design of Personalized Wearable Robotic Limbs

A. S. Abadian, A. Otaran, M. Schmitz, M. Muehlhaus, R. Dabral, D. Luvizon, A. Maekawa, M. Inami, C. Theobalt, J. Steimle

ACM Conference on User Interface Software and Technology (UIST), 2023

Description: In this paper, we present WRLKit, an interactive computational design approach that enables designers to rapidly prototype a personalized Wearable Robotic Limb (WRL) without requiring extensive robotics and ergonomics expertise.

[ paper ]

[ project page ]


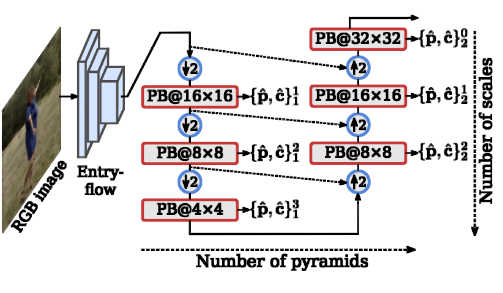

SSP-Net: Scalable Sequential Pyramid Networks for Real-time 3D Human Pose Regression

D. Luvizon, H. Tabia, D. Picard

Pattern Recognition, Volume 142, Oct. 2023

Description: In this paper, we propose a highly scalable convolutional neural network (SSP-Net) for real-time 3D human pose regression from a single image. SSP-Net is trained sequentially with refined supervision at multiple scales. Our network requires a single training procedure and produces its best predictions at 120 frames per second (FPS), or acceptable predictions at more than 200 FPS when truncated at test time. We show that the proposed regression approach is invariant to the size of the feature maps, which allows our method to apply intermediate supervision at multiple resolutions.

[ paper ]

[ arXiv ]
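The SSP-Net description states that the regression is invariant to the size of the feature maps. A common way to obtain this property is a soft-argmax that turns a heatmap into normalized coordinates; the sketch below assumes that approach for illustration and is not taken from the SSP-Net code. The function name `soft_argmax_2d` is hypothetical. A sharp peak at the same relative position gives the same output whether the heatmap is 2x2 or 6x6.

```python
import math
from typing import List, Tuple


def soft_argmax_2d(heatmap: List[List[float]]) -> Tuple[float, float]:
    """Hypothetical soft-argmax: returns (x, y) in normalized [0, 1] coordinates.

    A softmax over all pixels gives a probability map; the expected pixel-center
    position is divided by the heatmap width/height, so the result does not
    depend on the heatmap resolution (an assumption for illustration only).
    """
    h = len(heatmap)
    w = len(heatmap[0])
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(max(row) for row in heatmap)
    weights = [[math.exp(v - m) for v in row] for row in heatmap]
    total = sum(sum(row) for row in weights)
    x = 0.0
    y = 0.0
    for i, row in enumerate(weights):
        for j, wgt in enumerate(row):
            p = wgt / total
            # Use pixel centers (j + 0.5, i + 0.5), normalized by width/height.
            x += p * (j + 0.5) / w
            y += p * (i + 0.5) / h
    return x, y
```

Because the output is an expectation rather than an integer index, it is differentiable and can be supervised directly at every resolution.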


Scene-aware Egocentric 3D Human Pose Estimation

J. Wang, D. Luvizon, W. Xu, L. Liu, K. Sarkar, C. Theobalt

CVPR 2023.

Description:

In this work, we propose to estimate egocentric human pose guided by scene constraints. We devise a new egocentric scene depth estimation network for a wide-view egocentric fisheye camera, which estimates the depth behind the human with a depth-inpainting network. Our pose estimation model projects 2D image features and the estimated scene depth into a common voxel space and regresses the 3D pose with a V2V network. We also generate a synthetic dataset, EgoGTA, and an in-the-wild dataset based on EgoPW, called EgoPW-Scene.

[ paper ]

[ IEEE/CVF ]

[ arXiv ]

[ project page ]

[ code ]


Scene-Aware 3D Multi-Human Motion Capture from a Single Camera

D. Luvizon, M. Habermann, V. Golyanik, A. Kortylewski, C. Theobalt

Eurographics 2023.

Description:

We introduce the first non-linear optimization-based approach that jointly solves for the absolute 3D position of each human, their articulated pose, their individual shapes, and the scale of the scene. Given the per-frame 3D estimates of the humans and the scene point cloud, we perform a space-time coherent optimization over the video to ensure temporal, spatial, and physical plausibility. Our method consistently outperforms previous methods, and we qualitatively demonstrate that it is robust to in-the-wild conditions, including challenging scenes with people of different sizes.

[ arXiv ]

[ project page ]

[ source code ]


HandFlow: Quantifying View-Dependent 3D Ambiguity in Two-Hand Reconstruction with Normalizing Flow

J. Wang, D. Luvizon, F. Mueller, F. Bernard, A. Kortylewski, D. Casas, C. Theobalt

VMV 2022, Best Paper Honorable Mention

Description: This work presents the first probabilistic method to estimate a distribution of plausible two-hand poses given a monocular RGB input. We show quantitatively that existing deterministic methods are not suited for this ambiguous task, demonstrate the quality of our probabilistic reconstruction, and show that explicit ambiguity modeling is better suited for this challenging problem.

[ arXiv ]

[ project page ]


Estimating Egocentric 3D Human Pose in the Wild with External Weak Supervision

J. Wang, L. Liu, W. Xu, K. Sarkar, D. Luvizon, C. Theobalt

CVPR 2022

Description: We present a new egocentric pose estimation method that can be trained with weak external supervision. To facilitate the network training, we propose a novel learning strategy to supervise the egocentric features with high-quality features extracted by a pretrained external-view pose estimation model. We also collected a large-scale in-the-wild egocentric dataset called Egocentric Poses in the Wild (EgoPW) with a head-mounted fisheye camera and an auxiliary external camera, which provides additional observation of the human body from a third-person perspective.

[ paper ]

[ arXiv ]

[ project page ]

[ data ]


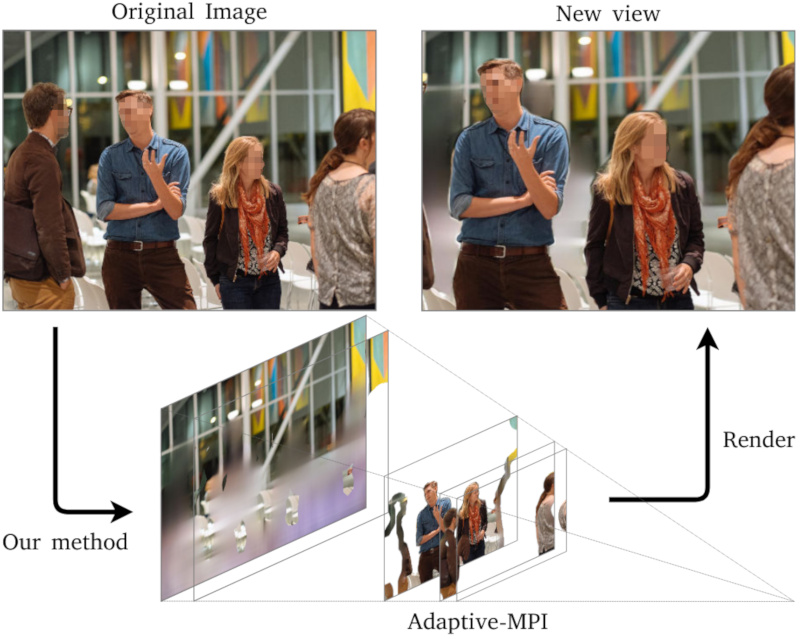

Adaptive multiplane image generation from a single internet picture

Diogo C. Luvizon, Gustavo Sutter P Carvalho, Andreza A. dos Santos, Jhonatas S. Conceicao, Jose L. Flores-Campana, Luis G.L. Decker, Marcos R. Souza, Helio Pedrini, Antonio Joia, Otavio A.B. Penatti

WACV 2021, CVPR 2021 Workshop Learning to Generate 3D Shapes and Scenes

Description: In this paper, we address the problem of generating an efficient multiplane image (MPI) from a single high-resolution picture. We present the adaptive-MPI representation, which allows rendering novel views with low computational requirements. To this end, we propose an adaptive slicing algorithm that produces an MPI with a variable number of image planes. We also present a new lightweight CNN for depth estimation, which is learned by knowledge distillation from a larger network. Occluded regions in the adaptive-MPI are also inpainted by a lightweight CNN. Our method produces high-quality predictions with one order of magnitude fewer parameters than previous approaches.

[ paper ]

[ arXiv ]

[ CVPRW'21 link ]
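Rendering a view from an MPI, adaptive or not, ends with back-to-front "over" compositing of its RGBA planes. The sketch below shows only that standard step for a single pixel; it is an assumption-based illustration, not code from the paper, and it omits the per-plane warping needed for novel viewpoints as well as the adaptive slicing algorithm. The name `composite_over` is hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def composite_over(planes: List[Tuple[RGB, float]]) -> RGB:
    """Hypothetical per-pixel MPI compositing.

    `planes` is ordered from back (farthest) to front (nearest); each entry is
    (rgb, alpha). Each plane is blended over the running result with the
    standard "over" operator: out = alpha * c + (1 - alpha) * out.
    """
    out = (0.0, 0.0, 0.0)
    for rgb, alpha in planes:
        out = tuple(alpha * c + (1.0 - alpha) * o for c, o in zip(rgb, out))
    return out
```

The loop runs once per plane, so its cost grows with the number of planes; this is one reason a variable, scene-dependent plane count can lower rendering cost.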


Recent Positions

- 2021 - current: Postdoctoral Researcher at MPI-INF VCAI

- 2019 - 2021: Research Scientist at Samsung SRBR

- 2011 - 2014: Electronic Engineer at Ensitec Tecnologia

Education

- 2015 - 2019: PhD Student at ETIS / Cergy-Paris Université (France), Individual Grant 233342/2014-1 CNPq

- 2013 - 2015: MSc Degree in Applied Computing & Computer Vision, UTFPR/DAINF (Brazil)

- 2007 - 2011: BSc Degree in Electronics Engineering, UTFPR (Brazil)